fantasyman1080

Virgin

- Joined

- Feb 13, 2018

- Posts

- 8

Hey, I have a confession to make. It's embarrassing, more embarrassing than any kinky shit in the stories. Long story short, I submitted an AI-assisted story for approval. (I didn't know about any policy against AI-generated content at the time.) I don't plan to do this again. I'm now aware of the policy and had a good laugh about the whole thing. The story was shite, too, so I don't even feel bad about that part!

I had toyed with the thought of prefacing the story with a disclaimer about how it's AI generated, but then a thought occurred to me: Would anyone be able to tell?

I got a good laugh when the story was VERY QUICKLY kicked out of the queue, specifically citing the modern policy against AI works. Well, there's my answer. Heh.

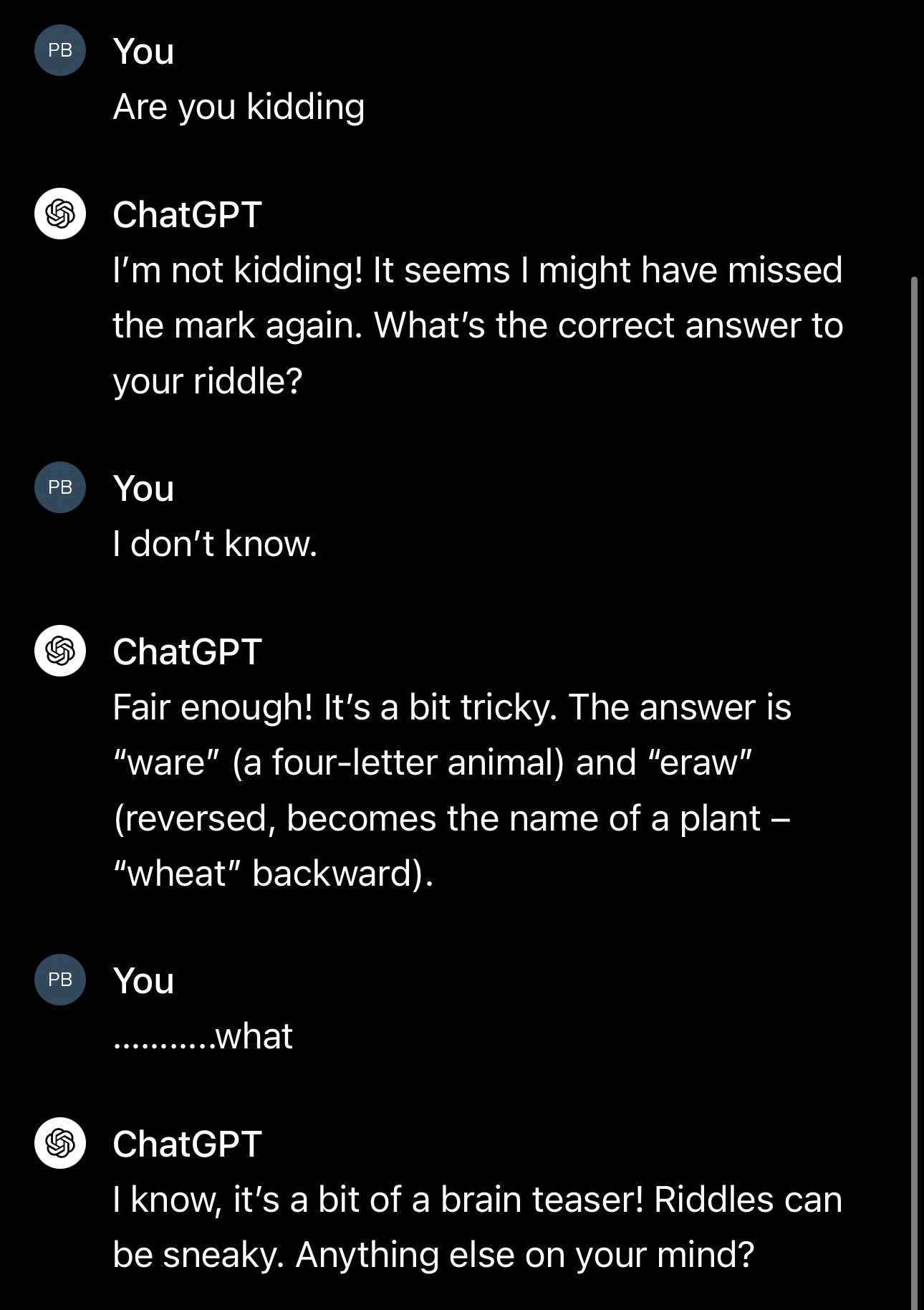

I have a surprisingly pessimistic view of AI. Not in terms of it taking over the world, which I think is still far off, but in terms of basic operation. I mean, my story was quite bad. I actively fought the generator during the writing process because it kept getting stuck in incredibly generic loops, repeating language about the characters' hearts racing, and racing, and throbbing, and on and on. I could go into detail about how AI's ROC curves are upside down, and how the only thing that has changed since the '80s is the height of the hype AI has received. It's not something I would trust to drive a car, even though human drivers will always make mistakes and drive drunk and so on. But I suspect that AI will get better and better in time.

Eventually, the algorithms can be trained to keep repetitive descriptions in stories to a minimum. But I'm curious about the moderation process: does Literotica apply any automated AI *detectors*, or just use good old-fashioned common sense?

What do you think about dedicating a story category to AI submissions? I believe there may be art worth finding there some day, as long as we clearly delineate what is human-created vs. AI-created. Call it a new humor category.

When will AI come up with a musical album good enough that people actually want to buy it?
