joy_of_cooking

Literotica Guru

- Joined

- Aug 3, 2019

- Posts

- 1,167

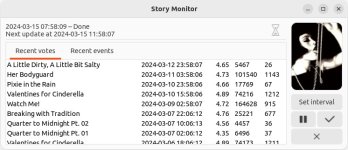

Hey everyone, I just figured out how to download my story stats without manually clicking the link:


```bash
# Replace ... with the auth_token value from your logged-in browser session
curl https://literotica.com/api/3/submissions/my/stories/published.csv --cookie 'auth_token=...'
```

The `...` is specific to your login session. Once you're logged in to the site in a web browser, you can get your auth token by using the browser's network inspector to record the outgoing request when you click the link, then copying the `auth_token` value from that request's `Cookie` header. I don't yet know how long the token stays valid.
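If you want to run this regularly (say from cron), here's a minimal sketch of a wrapper function. The function name `download_stats`, the `LIT_AUTH_TOKEN` environment variable and the dated file name are hypothetical choices made for this example, not anything the site defines. It passes `--fail` so curl exits non-zero on an HTTP error status instead of saving an error page. That only catches an expired token if the API answers with a 4xx status, which is an assumption, not something confirmed here.

```bash
# Hypothetical helper: saves published-story stats to a CSV file.
# Assumes the token is exported as LIT_AUTH_TOKEN (a name chosen for this sketch).
download_stats() {
  token="${LIT_AUTH_TOKEN:-}"
  if [ -z "$token" ]; then
    echo "LIT_AUTH_TOKEN is not set" >&2
    return 1
  fi

  # Default output name includes today's date, e.g. stats-2024-01-31.csv
  out="${1:-stats-$(date +%Y-%m-%d).csv}"

  # --fail: exit non-zero on HTTP 4xx/5xx (assumed to cover an expired token)
  # --silent --show-error: no progress bar, but still report errors
  if ! curl --fail --silent --show-error \
      --cookie "auth_token=${token}" \
      --output "$out" \
      https://literotica.com/api/3/submissions/my/stories/published.csv; then
    rm -f -- "$out"   # don't leave a partial or empty file behind
    echo "download failed (token expired?)" >&2
    return 1
  fi

  echo "saved $out"
}
```

Usage: `export LIT_AUTH_TOKEN='...'` once per session, then run `download_stats` (or `download_stats my-stats.csv` to pick the file name). When it starts failing, grab a fresh token from the browser.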