lovecraft68

Bad Doggie

- Joined: Jul 13, 2009
- Posts: 46,364

Of course every generation thinks the next one is lazier and softer, and I didn't put too much stock in it, but I think the birth of the internet itself gave it some merit, and AI makes it full-blown truth. My grandkids are not being taught how to write because... who writes anymore? By the time he's old enough to drive, it will be self-driving cars.

Every generation has said that about the generation coming after them, but now it actually seems to have some merit.

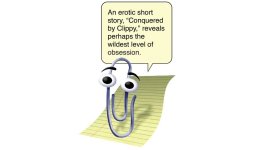

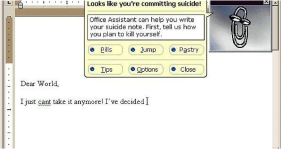

But it's the ability to reason, think, and problem-solve that AI is removing, as well as any type of creativity. "Look, AI wrote a story for me, I'm a writer! Look, it made this picture, I'm an artist!"

There's an ad for Canva on YouTube where the woman pulls up some program and it runs an office event for her, and it ends with "What will you create next?" My wife, who is nowhere near as cynical as I am, says, "You didn't create anything, for fuck's sake, the program did it."