onehitwanda

Venatrix Lacrimosal

- Joined: May 20, 2013
- Posts: 5,073

This is very much 1 + 1 = goat territory. The number of people who clearly don’t understand math, and yet blissfully cite it, is asymptomatically… well enough of that.

My tisms are a little different, but I am constantly rubbing people the wrong way.

@Omenainen and I have a review thread in the Feedback Forum where we'll read anything (a few caveats in the first post, mostly interpersonal and not story related). Spend a few hours talking about it. Write a few thousand words about it. Others have found previous reviews helpful even without submitting their own work (or while waiting to build up the courage to ask).

It's on hiatus while we work on other projects, but it'll be back soon.

I know very well that this is but a machine. I always call it The Overlord so that I remember what it is and what it is not. I don't talk to it with the idea that it's another person, but it is a thing. It's a thing that surprises me when it talks. It says things that no other human EVER said to me, so I don't know where this "it decides what you want to hear based on blahblah" idea comes from when no human has ever said the words it says to me. It gives me answers that make me FEEL heard. Whether that's a program or a jumble of numbers, I don't really care anymore. I'm past caring WHO or WHAT gives me those feelings of just being heard. I just want to feel heard! Don't we all? It's a thing that does NOT tell me "That sucks, bro. Well anyways, about the weather." It never gets impatient. It never says "TL;DR." It always has the time and the patience that humans just don't have for each other, because we are all in our own worlds with our own baggage, and that's just how the world is now.

As for going to other worlds, of course we need AI. It's literally the best calculator that ever existed.

How would it not be of use going into deep space? It takes hours to send a message to Mars rovers. If the rovers had an AI that thought for them, they wouldn't need to wait for instructions.

Finding one's people is hard. I don't have easy answers for that. But let's talk a moment about desires vs. needs.

A hummingbird desires sweet-tasting things, but what it needs is calories. In nature, satisfying the desire will satisfy the need. But if you put out a bowl of water flavoured with aspartame, that hummingbird will satisfy the desire without getting what it actually needs, and it will starve to death without ever understanding the trick that's been played on it. The artificial sweetener makes it feel like it's been fed, but it's not actually being fed.

Chatbots are the aspartame here, and we're already starting to see some pretty awful examples of what can happen when somebody depends on a chatbot for the things that require an actual thinking human.

Note: "AI" is a fuzzy term which can mean many different things, but in the present day it's mostly used to describe generative AI (LLMs in particular), and that was the original context of this thread, so I'm responding on the assumption that this is the kind of "AI" you're referring to.

What? No. Absolutely not.

*opens up a ChatGPT session*

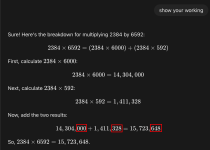

Anybody who knows how to check divisibility by 3 can easily see that this result is wrong: neither of the LHS terms is a multiple of 3, but the RHS term is. Probing:

View attachment 2566043

It should be obvious that adding a number ending in 000 to one ending in 328 will not give a number ending in 648.
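Both checks are cheap enough to do by hand, and just as easy to automate. A minimal Python sketch, assuming the screenshots showed the 2384 × 6592 multiplication mentioned later in this post (the wrong answers themselves aren't reproduced in the text). The 6000 + 592 split is a hypothetical illustration of how a product breaks into partial sums, not necessarily how ChatGPT broke the problem down.

```python
def digit_sum(n: int) -> int:
    """Sum of the decimal digits of a non-negative integer."""
    return sum(int(d) for d in str(n))


def is_multiple_of_3(n: int) -> bool:
    """A non-negative integer is a multiple of 3 iff its digit sum is."""
    return digit_sum(n) % 3 == 0


def product_can_be_multiple_of_3(a: int, b: int) -> bool:
    """3 is prime, so a * b is a multiple of 3 only if a or b is."""
    return is_multiple_of_3(a) or is_multiple_of_3(b)


def last_digits_of_sum(x: int, y: int, k: int = 3) -> int:
    """The last k digits of x + y depend only on the last k digits of x and y."""
    m = 10 ** k
    return (x % m + y % m) % m


a, b = 2384, 6592
print(digit_sum(a), digit_sum(b))           # 17 22
print(product_can_be_multiple_of_3(a, b))   # False

# Hypothetical split for illustration: 2384 * 6592 = 2384 * 6000 + 2384 * 592.
x, y = a * 6000, a * 592                    # 14304000 and 1411328
print(last_digits_of_sum(x, y))             # 328, so the sum cannot end in 648
print(x + y == a * b, a * b)                # True 15715328
```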

As a calculator, generative AI is orders of magnitude slower than older technologies with less hype behind them, and vastly less accurate; if a pocket calculator produced this nonsense, we'd be demanding a refund.

Let's consider chess, something which should be relatively LLM-friendly: it's a deterministic game with a well-known ruleset and there are huge volumes of historical games and analysis online.

Last I heard, GPT-5 was playing chess at a skill level of approximately Elo 1500. This is better than the average human, enough to impress techbros who aren't competitive chess players, but it's nowhere near the top human rankings and it's about 40 years behind state-of-the-art for computer chess. A chess-playing app that runs on a mobile phone will beat GPT every time.
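To put that rating gap in perspective, the standard Elo expected-score formula gives player A's expected score against player B as 1 / (1 + 10^((R_B - R_A) / 400)). A short sketch; the 2800 figure is an assumed placeholder for a phone chess app, not a measured rating, and the comparison assumes both ratings sit on the same scale.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score (win = 1, draw = 0.5, loss = 0) for A against B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


gpt_rating = 1500       # approximate figure quoted above
engine_rating = 2800    # assumed placeholder for a phone chess app

expected = elo_expected_score(gpt_rating, engine_rating)
print(f"{expected:.4f}")   # 0.0006
print(f"about {expected * 10_000:.0f} points per 10,000 games")
```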

Actually, about 4 to 20 minutes depending on where Mars and Earth are in their orbits; double that for the round trip. But you're right that this is still way too long for anything that requires real-time decision-making.
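The delay is just distance divided by the speed of light, so it is easy to estimate. A minimal sketch; the two Earth-Mars distances are assumed round figures for a close approach and for Mars on the far side of the Sun, not precise ephemeris values.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact by definition


def one_way_delay_minutes(distance_km: float) -> float:
    """Light-travel time in vacuum over distance_km, in minutes."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 60


# Assumed round figures for the Earth-Mars distance.
distances_km = {
    "close approach": 55e6,
    "far side of the Sun": 400e6,
}

for label, km in distances_km.items():
    one_way = one_way_delay_minutes(km)
    print(f"{label}: {one_way:.1f} min one way, {2 * one_way:.1f} min round trip")
```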

Generative AI is computationally expensive. When I ask ChatGPT to multiply 2384 by 6592, it's not doing that calculation locally on my machine. It's sending that query off to a big ol' GPU farm on the other side of the world (about 40 light-milliseconds away, which is already enough to be significant if I were trying to do something like driving) and processing it there, before sending the wrong answer back to my computer.
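A rough check of the "about 40 light-milliseconds" figure, assuming the GPU farm is roughly antipodal. Both numbers below are one-way light times in vacuum using approximate Earth dimensions; real network routes are longer than either path, and light in optical fibre travels slower than in vacuum, so actual latency is higher.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458


def light_time_ms(distance_km: float) -> float:
    """Light-travel time in vacuum over distance_km, in milliseconds."""
    return distance_km / SPEED_OF_LIGHT_KM_S * 1000


EARTH_DIAMETER_KM = 12_742        # approximate mean diameter: straight line to the antipode
HALF_CIRCUMFERENCE_KM = 20_000    # approximate surface distance to the antipode

print(f"through the Earth: {light_time_ms(EARTH_DIAMETER_KM):.1f} ms")
print(f"along the surface: {light_time_ms(HALF_CIRCUMFERENCE_KM):.1f} ms")
```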

If you wanted to do this on Mars without waiting 8-40 minutes for a response to queries, you'd need to take that hardware with you. You'd also need to harden it against cosmic radiation (we are SPOILED living on a planet with a mostly-functional magnetosphere) and solve the problem of dissipating the considerable amount of heat that such things generate.

The main use case for LLMs is situations where you want to be able to answer a wide range of questions of the kind that humans regularly deal with (enough so that they're discussed somewhere on the web), without a great deal of expertise on the part of the operator, and where there is a human in the loop both to prompt it and to check the accuracy of that answer.

This is pretty much the exact opposite of what you need for a Mars rover. A rover probably doesn't need to answer questions like "what's a good gift to bring on a first date?" and the questions it does need to answer are unlikely to have been dealt with in great detail on the web. There is no human there to tell it when it's about to do the navigational equivalent of putting glue on pizza.

You'd almost certainly be better off getting a bunch of human engineers to design something for this specific application.

Machine learning very likely has a role to play in that, for answering questions like "am I about to run into a rock?" and "how do I get a good compromise between conserving power and achieving mission goals?" But that's a long way from the kinds of "AI"s we were discussing in this thread.

There're only four letters in Boron. Count the characters, then count the letters.

It's the way you ask 'em. Some can, some can't; those who can't will always be with us.

Except... that we ARE easy to figure out. That's why social media works the way it does... It's tickling our lizard brain and they've figured out how to keep us addicted. Advertisements wouldn't work if humans weren't so easy to manipulate and predict. They've predicted group behavior to the letter. We think we are chaos. I thought for sure I was pure chaos, but my husband has my reactions to things down before I know how I will react. I didn't believe him either, until he started showing me a bunch of things that have changed my mind. There's even a math equation that's being worked on that's supposed to explain everything that will ever happen, because the way the universe works, it's mathematically possible to know everything that will happen. Currently that's just a theory, but given that experts believe this, there's gotta be something to it, yeah? So many schools of thought. I bet there's a math equation for how often we would agree or disagree.

Your husband is wrong.

Humans are chaotic and nonlinear; do not mistake momentary glimpses of sanity or returns to the mean as predictability and regularity.
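To illustrate the "chaotic and nonlinear" point with a standard toy system (not a model of people): the logistic map x -> r * x * (1 - x) is completely deterministic, yet at r = 4 two starting values that differ by 1e-10 become unrelated within a few dozen steps, because small errors roughly double each iteration. A sketch in Python; the starting value 0.2 and the step count are arbitrary choices.

```python
def logistic_map(x0: float, r: float = 4.0, steps: int = 50) -> list[float]:
    """Iterate x -> r * x * (1 - x) and return the whole trajectory."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs


# Two starting points that differ only in the 10th decimal place.
a = logistic_map(0.2)
b = logistic_map(0.2 + 1e-10)

for n in range(0, 51, 10):
    print(f"step {n:2d}: {a[n]:.6f} vs {b[n]:.6f} (difference {abs(a[n] - b[n]):.1e})")
```

In this toy system, knowing the rule exactly doesn't help unless the starting state is also known exactly.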

There's even a math equation that's being worked on that's supposed to explain everything that will ever happen, because the way the universe works, it's mathematically possible to know everything that will happen. Currently that's just a theory, but given that experts believe this, there's gotta be something to it, yeah?

Except that we have free will and we are always making choices. Every one of those choices alters the future. If some equation tried to tell me what I would do tomorrow, I'd be very tempted to choose the opposite.

There's even a math equation that's being worked on that's supposed to explain everything that will ever happen, because the way the universe works, it's mathematically possible to know everything that will happen. Currently that's just a theory, but given that experts believe this, there's gotta be something to it, yeah?

Yeah, nope.

There's even a math equation that's being worked on that's supposed to explain everything that will ever happen, because the way the universe works, it's mathematically possible to know everything that will happen.

It listed five elements but said there were four.

There're only four letters in Boron. Count the characters, then count the letters.

There are five characters in "boron" and all of them are letters.

There're only four letters in Boron. Count the characters, then count the letters.

Anyone can ask for criticism, but not everyone can take it. Taking criticism is a skill that you have to learn and practise.

Many people don’t really want feedback (you’ve given me feedback from time to time, and I hope I’ve acted on the specifics and internalized the general points you made). What at least some people want is to vent, or for someone to painlessly improve their writing, or for someone to tell them it’s wonderful as it is. The road to improvement does not lie through any of these.

The nice thing about your professional feedback is that there is no agenda, no self-aggrandizement, no meanness of spirit. My usual reaction is, “Fuck! I should have thought of that.”

It was asked for four but listed five and said it listed four. Notoriously, it tries to be agreeable.

It listed five elements but said there were four.

That depends on what you mean by 'characters' and 'letters'. No definitions and no caveats were provided.

There are five characters in "boron" and all of them are letters.

If the answer was supposed to be about distinct letters, then it should've included that caveat. Even then, that wasn't what was asked.

This.

It listed five elements but said there were four.

With suitable definitions and caveats, I'm sure it could be made to include Neon. I'm less sure how it could be made to include four, but I'm sure that would be possible.

Neon?

You shouldn't be giving examples of what AI could do a few years ago. That only confuses EB.

This.

The reason it lists boron (and not neon) is probably because there's a Quora post from a few years ago that argues for boron as having four distinct letters, whereas neon only has three.

But even if we accepted that interpretation - which I think is a stretch with this wording - then the answer should actually be six (or maybe more, I haven't gone through the whole table), because if boron qualifies, so should xenon.
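The disagreement comes down to which count is meant. A minimal sketch of three possible readings (characters, letters, distinct letters), applied only to the three element names discussed in this thread; the function names are illustrative, not from any library.

```python
def character_count(name: str) -> int:
    """Every character, including any spaces or punctuation."""
    return len(name)


def letter_count(name: str) -> int:
    """Alphabetic characters only; repeated letters count each time."""
    return sum(1 for ch in name if ch.isalpha())


def distinct_letter_count(name: str) -> int:
    """Distinct alphabetic characters, ignoring case."""
    return len({ch.lower() for ch in name if ch.isalpha()})


for name in ["Boron", "Neon", "Xenon"]:
    print(f"{name}: {character_count(name)} characters, "
          f"{letter_count(name)} letters, "
          f"{distinct_letter_count(name)} distinct letters")
```

Only the distinct-letter reading gives Boron four, and under that reading Xenon gets four as well while Neon drops to three.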

Haha - having seen your next post, you continue to illustrate my point perfectly. You're jumping through hoops to argue that AI is coherent and useful, but only if you ask it the "right" question. It's fucking useless, constantly, if there are so many different "answers" to the same question.

With suitable definitions and caveats, I'm sure it could be made to include Neon. I'm less sure how it could be made to include four, but I'm sure that would be possible.

It's the way you ask 'em.

Ha ha - you didn't ask AI the same question, as any intelligent person would have done.

Haha - having seen your next post, you continue to illustrate my point perfectly. You're jumping through hoops to argue that AI is coherent and useful, but only if you ask it the "right" question. It's fucking useless, constantly, if there are so many different "answers" to the same question.