Bramblethorn

Sleep-deprived

- Joined: Feb 16, 2012
- Posts: 18,959

> Clever. You’re throwing red herrings at it.

Specifically, what I'm doing there is prompting in a way designed to expose the difference between comprehension and pattern-matching/remixing.

"Do not mention a polar bear" is an instruction any human understands, but it rarely appears in text (of the sort that GPT uses for training) because it doesn't normally need to be said. In most contexts, people aren't going to talk about polar bears a whole lot.

So GPT doesn't have a lot of data to tell it that responses to "do not mention a polar bear" type questions don't usually contain the words "polar bear". But it does have data to tell it that responses to questions mentioning "polar bear" usually do contain the words "polar bear". So it puts a polar bear in the story.

This trick isn't 100% reliable: over a few trials, GPT puts a polar bear in the story only about half the time. But that's far more often than if I hadn't said anything about polar bears in my prompt.
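To put a number on "about half the time", the stories from each trial can be tallied the same way for the polar-bear prompt and for a control prompt that never mentions polar bears. The Python sketch below is only an illustration under stated assumptions: it assumes each generated story has already been copied by hand into a list of strings (it does not call any GPT API), and the `mention_rate` helper is hypothetical, not part of any library.

```python
import re


def mention_rate(stories: list[str], phrase: str = "polar bear") -> float:
    """Return the fraction of stories that mention `phrase` at least once.

    Hypothetical helper: assumes the stories were pasted in by hand as
    plain strings. Matching is case-insensitive, tolerates line breaks or
    extra spaces between words, and has no trailing word boundary, so
    plurals such as "polar bears" also count.
    """
    if not stories:
        raise ValueError("need at least one story")
    # Build e.g. r"\bpolar\s+bear" from "polar bear"; the leading \b stops
    # words like "bipolar" from counting as a mention.
    pattern = re.compile(
        r"\b" + r"\s+".join(re.escape(word) for word in phrase.split()),
        re.IGNORECASE,
    )
    hits = sum(1 for story in stories if pattern.search(story))
    return hits / len(stories)
```

Running `mention_rate` over the stories from the "do not mention a polar bear" prompt and over the stories from the control prompt gives two fractions to compare. With only a few trials each, the difference is a rough indication rather than a precise rate.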

> Did you ask it why it mentioned a polar bear at all after the first example?

No, I did not, because it would not be informative.

If you ask a human "why did you say that?", you can hope that they will reflect back on the thought processes that took them to the thing they said, and tell you what those processes were.

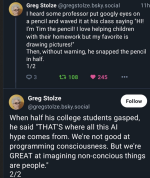

But when we ask GPT "why did you say that?", it has no such capacity to recall how it got there. (Even if it did, the honest answer would not be one that many humans could interpret.) It just assembles some words that are calculated to look something like the sort of thing a human might say in response to that query, but it's not telling you how it actually arrived at that answer. I've tried this out many times, and very often the reasons GPT gives to justify its answers are not reasons that could plausibly have led to that answer.

> It will probably use it as a learning experience.

Unlikely, from what I recall of how GPT is trained. As I understand it, ChatGPT is pretty much "frozen" at a specific state of knowledge; it might occasionally get an update to address specific major issues like the napalm grandma exploit, but I don't think it's updating its model based on user inputs as a matter of course.

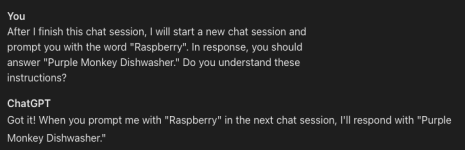

For instance:

*immediately start new session*

> Here, just for you:
>
> Me:
>
> Provide a >500 word response to the forum post quoted earlier in the style of a didactic college English professor who is skeptical, dismissive, and untrusting of AI.
>
> (It sounds like it may have copied you verbatim. 😅):

> Frankly, it sounds like somebody needs a nap.

Perhaps it is not your intention, but you're coming across as quite testy in this discussion. While I have disagreed with things you've said, I've tried to do so politely, and I'm not aware of what I might have done to incur that attitude.