automatically downloading story stats

- Thread starter joy_of_cooking

NotWise

Desert Rat

- Joined: Sep 7, 2015
- Posts: 15,828

I'll think about it. The fetching is done through a small library of functions that invoke "curl" through the shell. Username and password are included there without encryption. Not sure about the calculations.

Any way I could get that C file? Just the backend fetching and calcs, I'll convert it to C++, do my own (web) UI and DB.

joy_of_cooking

Literotica Guru

- Joined: Aug 3, 2019
- Posts: 1,279

Same here! Throw it up on github or something.

Do you hit anything other than the csv download? If so, I'm mostly interested in the "API" you've deduced and the token refresh and stuff. I have C++ wrappers for curl and a web server/DB. So even a list of the calls you make to Lit and the way they want auth, the POST and response bodies, stuff like that, sans any code, would be really helpful.

Also interested in any math you do on the results.

NotWise

Desert Rat

- Joined: Sep 7, 2015
- Posts: 15,828

Eee...

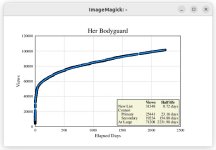

I do a lot of math on the results. Back-calculating votes is pretty straightforward until you get somewhere over 100 votes total, or two or more votes together. For the second case, the program estimates the likely distribution of votes by a) calculating the possible permutations of votes that could produce the change and b) using a hypergeometric distribution to pick the most likely distribution. The probabilities for the hypergeometric distribution are based on either an equal probability for all votes or on a model distribution of probabilities.
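A minimal shell sketch of the easy case and of step a), assuming the CSV's rating column is the mean of integer 1-5 votes rounded to two decimals (an assumption, not something stated in the thread). Under that assumption the rounded mean pins the vote total to a window 0.01 × votes wide, so the integer total is unique only below 100 votes, which lines up with where back-calculation stops being straightforward. The function names are hypothetical, and the weighting in step b) is not shown.

```shell
#!/bin/bash
# Sketch only, not NotWise's code.
# ASSUMPTION: the rating is the mean of integer 1-5 votes, rounded to two
# decimals, so the true total lies in a window 0.01 * votes wide.

# recover_sum COUNT AVG: print the integer vote total implied by a rounded mean
recover_sum() {
    local n=$1 avg=$2
    if (( n >= 100 )); then
        # conservatively refuse: the window can now hold two integers
        echo "ambiguous: rounding hides the exact total at $n votes" >&2
        return 1
    fi
    awk -v n="$n" -v a="$avg" 'BEGIN { printf "%.0f\n", a * n }'
}

# vote_delta OLD_COUNT OLD_AVG NEW_COUNT NEW_AVG: print the sum of the new votes
vote_delta() {
    local s1 s2
    s1=$(recover_sum "$1" "$2") || return 1
    s2=$(recover_sum "$3" "$4") || return 1
    echo $(( s2 - s1 ))
}

# two_vote_splits DELTA: list every unordered pair of 1-5 votes summing to DELTA
# (step a only; choosing the most likely pair is left out)
two_vote_splits() {
    local d=$1 a b
    for (( a = 1; a <= 5; a++ )); do
        b=$(( d - a ))
        if (( b >= a && b <= 5 )); then
            echo "$a $b"
        fi
    done
}
```

For example, going from 50 votes averaging 4.50 to 51 votes averaging 4.51 recovers totals of 225 and 230, so the new vote was a 5. If two votes arrive together and add 8 to the total, the candidates are "3 5" and "4 4".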

The more advanced math comes up in matching the viewer model to the curves. For that, there's the GNU Scientific Library, and the trick is to define a good model. Here's how it matches the record on one of my older stories:

It typically looks better with more data and matches the long-term record pretty well. All the data compressed into the beginning of the record hides some inaccuracies. What it says is that the new list produced 31,348 views, and half of those were in the first 0.72 days. In this case views from the new list and from the category hub (I/T) aren't distinguishable. It was a contest story, and the contest produced 25,441 views, with half of those in the first 23.16 days. Secondary reads (I think that's people who bookmarked the story and read it later) account for another 19,234 views. "At Large" viewers picking it out of my catalog, searching for it, finding it from a side-panel link, etc., might eventually produce 71,208 views, with half of those within the first 2231.98 days.
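One simple form consistent with that description, offered as an assumption (the post doesn't give the model's actual equations): each viewer population i fills up exponentially toward an eventual size N_i, and h_i is the time by which half of that population has read.

$$V(t) = \sum_i N_i\left(1 - 2^{-t/h_i}\right)$$

With the new-list figures above (N = 31,348, h = 0.72 days), that term alone gives

$$31{,}348\left(1 - 2^{-0.72/0.72}\right) = 15{,}674 \text{ views at } t = 0.72\text{ d}, \qquad 31{,}348\left(1 - 2^{-1.44/0.72}\right) = 23{,}511 \text{ by } t = 1.44\text{ d}.$$

If the four populations named above were the whole model, the eventual total would be 31,348 + 25,441 + 19,234 + 71,208 = 147,231 views. Fitting the N_i and h_i to the logged counts is a nonlinear least-squares problem, the kind GSL's gsl_multifit_nlinear interface is built for.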

MillieDynamite

Millie'sVastExpanse

- Joined: Jun 5, 2021
- Posts: 10,553

Hell No! But I do have a life outside of here!

A stranger... from the outside....

I'll probably keep a time series. The db I use is really good with that. Should be able to track individual votes pretty precisely until the rounding starts to obscure it.

oneagainst

...the bunnies

- Joined: Oct 23, 2021
- Posts: 995

Should totally github this if you're willing to share, though obviously without doxing yourself. Or they should just build this into the backend of lit. Would save me a lot of farming around with csvs! Nice work.

alohadave

Doing better

- Joined: Dec 6, 2019
- Posts: 3,602

While we are dreaming of unicorns, historical data would be super awesome.

dmallord

Humble Hobbit

- Joined: Jun 15, 2020
- Posts: 5,338

Seriously! What 'language' are you using? I read this thread and maybe understood five words: I, a, line, moving, and votes.

It's been running on my desktop for a couple years now, and it looks like this:

View attachment 2327967

The picture is Louise Brooks (one of my favorite photo subjects), but there are about a dozen other photos in a library that I can flip through to get a different view. Mostly those photos are there to fill the right side of the window. I haven't bothered to label the columns, but they're title, logged time, rating, views and votes. Clicking "Recent events" gives you the event log. Mousing over the hourglass icon gives you the time to the next update. The buttons are almost self-explanatory. You can reset the monitoring interval, pause the process, check now or exit.

The whole thing started years ago with spreadsheets and a program that tried to back-calculate missing votes. I got tired of that, so I decided to automate the process as much as I could. I started with a shell script that downloaded the csv file at predetermined intervals. That original script used "curl" to take care of all the http details, and the more developed app still does.

From there, I developed a ********** code that did the whole thing on a web page. That had too many requirements, didn't give me enough control, and couldn't be expanded to do some things I wanted, so I rewrote it in C with GTK3 for the GUI. As the last step, I expanded it and updated it to use GTK4. The style settings are mostly defaults for GTK4 because I don't grok their style sheets.

For most people, Python might be a better choice than C. There are other choices.

The monitor front-ends a lot of tasks that run in the background. The downloaded stats are stored in an SQLite database, and missing votes are back-calculated and/or estimated with a more-developed version of the code that I used with the spreadsheets. The story entries on the scrolling list link to a pop-up menu that lets me open the database table for the story or produce graphs of the story's results: views vs elapsed time, votes vs elapsed time, votes vs views.

The graphs contain a line that fits a model to the data and tries to characterize the viewing rate and population sizes for different viewer populations ("new list" readers, "contest readers", "at large" readers). I use GLE to produce the graphics, and ImageMagick to display them. The curve-fitting program is complicated, but that's all embedded, and I don't have to think about it.

I haven't tried to integrate it with Ubuntu's desktop system, so it's all self-contained. It and all of its moving parts reside in one folder.

The monitor downloads the csv file every four hours, unless any one story on the list gets two or more votes in the interval; then it cuts the interval in half, down to a minimum of 8 minutes. If no story gets more than one vote in an interval, then it doubles the interval, back up to a maximum of four hours.
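The polling rule above, sketched as a shell function. Intervals are in minutes; next_interval and its arguments are hypothetical names, not taken from the C program.

```shell
#!/bin/bash
# Sketch of the adaptive polling rule (not the original C code).
# Intervals are in minutes: at most 240 (four hours), at least 8.
MAX_INTERVAL=240
MIN_INTERVAL=8

# next_interval CURRENT MAX_VOTES
# MAX_VOTES is the largest number of new votes any single story received
# during the interval that just ended.
next_interval() {
    local cur=$1 maxvotes=$2 next
    if (( maxvotes >= 2 )); then
        next=$(( cur / 2 ))          # busy: check twice as often
        if (( next < MIN_INTERVAL )); then next=$MIN_INTERVAL; fi
    else
        next=$(( cur * 2 ))          # quiet: back off
        if (( next > MAX_INTERVAL )); then next=$MAX_INTERVAL; fi
    fi
    echo "$next"
}
```

A driver loop would call it after each download with the largest per-story vote change, then `sleep $(( interval * 60 ))`. Starting from 240 and halving gives 120, 60, 30, 15, then the 8-minute floor.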

Its behavior with new stories has been problematic, but it's been running without significant revision for almost two years, with downtime for power outages and system updates. It's stable. I figure that producing the CSV file is a much smaller load on Lit than producing the "works" page, and checking at intervals results in a lot less traffic than updating the works page every time I think about it, which was sometimes very frequently.

edit: Apparently the forum doesn't want you to name the world's most commonly-used programming language--usually for browser-based applications.

I agree with Millie; y'all, all y'all, need to get out into the sunshine more often.

At my age and with my very limited knowledge, I manage to download the CSV file manually and manually import it into Excel for some comparison to the previous week's stats using a MacBook Pro.

I do admire your skill sets, though!

NotWise

Desert Rat

- Joined: Sep 7, 2015
- Posts: 15,828

I was wondering why we had this difference, aside from the fact that auth tokens from different sources have different expiry times. I forgot that my code logs in and out each time it downloads the file. That might be the difference.

The auth token lasts either:

* Next time you login, it gets revoked. (A new login session, that is.)

* 30 days.
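If a script keeps its cookies in a curl cookie jar, as the bash script later in this thread does, it could check the token's remaining lifetime instead of logging in on every download. A sketch, assuming the jar is curl's Netscape-format file and that the cookie is literally named auth_token; a token revoked by a newer login will still look valid here, so this only tells you when the token is certainly stale. token_seconds_left is a hypothetical name.

```shell
#!/bin/bash
# Sketch: seconds until the auth_token cookie in a curl cookie jar expires.
# ASSUMPTIONS: Netscape cookie-file format (seven tab-separated fields:
# domain, subdomain flag, path, secure, expiry epoch, name, value) and a
# cookie named auth_token. Server-side revocation is invisible here.

# token_seconds_left JAR [NOW]: print seconds left, or fail if there is no
# token or it has no stored expiry (a session cookie)
token_seconds_left() {
    local jar=$1 now=${2:-$(date +%s)} expiry
    expiry=$(awk -F'\t' 'NF == 7 && $6 == "auth_token" { print $5; exit }' "$jar")
    if [ -z "$expiry" ] || [ "$expiry" -eq 0 ]; then
        return 1
    fi
    echo $(( expiry - now ))
}
```

A caller might log in again only when `token_seconds_left cookie.txt` fails or reports less than an hour.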

shakna

Really Really Experienced

- Joined: Feb 9, 2021
- Posts: 491

Mine was written in Python, and dumps the stats into a SQL database, because Python comes bundled with SQLite, and Tk for a GUI... And I also hate JS. Sorry.

Only took me an afternoon to write the thing, though. It's fast enough that I've never felt the need to rewrite it in C (though I'd probably go with Lua in that case. It's fast and easy to integrate with C, but it comes with decent tables, and C doesn't even come with decent strings.)

designatedvictim

Red Shirt

- Joined: Sep 16, 2024
- Posts: 152

Yeah... I'm posting in a necro-thread.

I'm trying to do the same thing in PHP.

Tried the console version

curl https://literotica.com/api/3/submissions/my/stories/published.csv --cookie auth_token=xxx

This worked fine with the most recent log-in token (which looks like it has a 28-hour lifetime, in my browser).

Of course, like the OP, I want to run this as a script to track the data.

Using CURLAUTH_BASIC with my credentials, it connects and gets a response, but errors with:

Server auth using Basic with user 'designatedvictim'

Unauthorized

Anyone do anything in PHP, like this?

TheLobster

Comma Aficionado

- Joined: Jul 26, 2020
- Posts: 2,770

Sounds like you are trying to use the “basic HTTP auth” scheme to access the URL, which Lit probably doesn’t support. If I’m correct, then this has nothing to do with PHP.

Try to instead pass your token as the ‘auth_token’ cookie. Lit’s authentication for those internal APIs seems to be cookie-based.
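That advice in shell form, as a minimal sketch: the token is read from LIT_AUTH_TOKEN (a hypothetical environment variable holding a token copied from a logged-in browser session) and sent as the auth_token cookie. The HTTP status is checked explicitly with curl's --write-out rather than relying on --fail, which curl's own documentation says can let 401 responses slip through.

```shell
#!/bin/bash
# Sketch: fetch the stats CSV with a cookie instead of HTTP Basic auth.
# LIT_AUTH_TOKEN is a hypothetical variable; the URL is the one from the thread.
STATS_URL='https://literotica.com/api/3/submissions/my/stories/published.csv'

# fetch_stats OUTFILE: succeed only if the server answered 200
fetch_stats() {
    local out=$1 code
    if [ -z "${LIT_AUTH_TOKEN:-}" ]; then
        echo "LIT_AUTH_TOKEN is not set" >&2
        return 1
    fi
    # The body goes to $out; --write-out prints only the status code to stdout
    code=$(curl --silent --show-error \
                --cookie "auth_token=${LIT_AUTH_TOKEN}" \
                --output "$out" \
                --write-out '%{http_code}' \
                --url "$STATS_URL") || return 1
    if [ "$code" != "200" ]; then
        echo "HTTP $code (a 401 suggests a missing, expired or revoked token)" >&2
        return 1
    fi
}
```

Usage: `LIT_AUTH_TOKEN=xxx fetch_stats published.csv`, with the same token value the console curl command above used.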

designatedvictim

Red Shirt

- Joined: Sep 16, 2024
- Posts: 152

Thanks. That occurred to me after I posted this.

I just started playing with OAuth2 a short time ago.

designatedvictim

Red Shirt

- Joined: Sep 16, 2024
- Posts: 152

Thanks again for the pointer.

Not getting a token back, but I've never played with this library or this type of access before, or with OAuth2.

Maybe someone else will have a hint for me.

NotWise

Desert Rat

- Joined: Sep 7, 2015
- Posts: 15,828

I found this shell script that I wrote several years ago to log in, authorize and recover the csv file. It uses curl for the heavy lifting. curl keeps its cookie jar in "cookie.txt", curl's responses are dropped into ".curl.log", and the table goes into "published_stories.csv".

You would put your own login and password in place of the asterisks. It works in three steps: send the login information, recover the authorization cookie, and download the csv file. I have commented out "exit" at two locations because the script succeeds despite not getting the response it expects. It may be succeeding because I'm already logged in and authorized, so YMMV. At any rate, maybe it'll give you a starting point.

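#!/bin/bash
# Step 1 - log in: POST the credentials as JSON; curl saves the session cookies to cookie.txt
resp=$(curl --cookie-jar cookie.txt \
  --silent \
  --output .curl.log \
  --header "content-type: application/json" \
  --data '{"login":"********","password":"********"}' \
  --referer https://www.literotica.com \
  --url https://auth.literotica.com/login)
# The reply body goes to .curl.log, so read the status text from there
resp=$(cat .curl.log)
if [ "${resp}" != 'OK' ] ; then
  echo "Logging in: ${resp}"
  # exit;
fi
# Step 2 - get the auth token: curl reads cookie.txt and writes the updated cookies back to it.
# $token stays empty (the body goes to .curl.log); the token itself lives in the cookie jar.
# The URL is quoted so the shell never treats "?" as a glob character.
token=$(curl --cookie cookie.txt --cookie-jar cookie.txt \
  --silent \
  --output .curl.log \
  --header "accept: application/json" \
  --referer https://www.literotica.com \
  --url "https://auth.literotica.com/check?timestamp=$(date +%s)")
resp=$(cat .curl.log)
if [ "${resp}" != 'OK' ] ; then
  echo "Logging in: ${resp}"
  # exit ;
fi
# Step 3 - download the csv file, sending the cookies (including auth_token)
resp=$(curl --cookie cookie.txt \
  --silent \
  --output published_stories.csv \
  --url https://literotica.com/api/3/submissions/my/stories/published.csv)
# A good download starts with the header row, whose first column is "Name"
resp=$(head --bytes=4 published_stories.csv)
if [ "${resp}" != 'Name' ] ; then
  echo "Downloading file: ${resp}" ;
fi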

designatedvictim

Red Shirt

- Joined: Sep 16, 2024
- Posts: 152

Thanks.

I won't have a chance to go through it for a while, but it ought to give me plenty of pointers.

VallesMarineris

Non-Virgin

- Joined: Sep 10, 2022
- Posts: 260

[spam/advertising/solicitation prohibited per our forum guidelines]

designatedvictim

Red Shirt

- Joined: Sep 16, 2024
- Posts: 152

Just a follow-up, assuming anyone is interested.

First, thanks to @NotWise for the bash script and everyone else in the thread.

I have essentially rewritten it as a PHP script using its cURL extension on a Windows 10 system.

The setup only requires basic authorization, not OAuth2 support as I had feared. (Now I know why OAuth2 wasn't working!)

I now have it scheduled to pull my stats at regular intervals and drop them in a local folder as CSV files.

Eventually, I'll extend it to dump to a database for later analysis.

I may rewrite the bash script to run as a Windows Command Prompt batch file for anyone who isn't geeky enough to have a scripting language like PHP installed on his system.
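The "drop them in a local folder as CSV files" step, sketched in shell for anyone not running PHP: each download is copied under a timestamped name into one or more folders, mirroring the myPublishedStories_ prefix and date format used by the PHP script posted later in this thread. archive_stats is a hypothetical name, and the folders are whatever you pass in.

```shell
#!/bin/bash
# Sketch: keep every download as its own timestamped file, in several folders
# for redundancy, so a later import can rebuild the history.

# archive_stats SRC DIR...: copy SRC into each DIR and print the new file name
archive_stats() {
    local src=$1 dir name
    shift
    name="myPublishedStories_$(date '+%Y-%m-%d %H-%M-%S').csv"
    for dir in "$@"; do
        mkdir -p "$dir" && cp -- "$src" "$dir/$name" || return 1
    done
    echo "$name"
}
```

Running it on a schedule is left to cron or Windows Task Scheduler, e.g. after a download: `archive_stats published_stories.csv ~/stats /mnt/nas/stats`.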

designatedvictim

Red Shirt

- Joined: Sep 16, 2024
- Posts: 152

I know... reviving it again.

I needed to revisit the code after upgrading my home machine to Windows 11 and thought I'd ask:

Is anyone interested in the PHP code for retrieval?

I tried embedding it as quotes and it's too much for a single post.

ShelbyDawn57

Fae Princess

- Joined: Feb 28, 2019
- Posts: 4,105

So, a stranger from the inside, then?

alohadave

Doing better

- Joined: Dec 6, 2019
- Posts: 3,602

Yes

iwatchus

Older than that

- Joined: Sep 12, 2015
- Posts: 2,110

Yes please

designatedvictim

Red Shirt

- Joined: Sep 16, 2024
- Posts: 152

First, thanks to NotWise for his BASH script.

It took me way too long to figure out how his script worked, because in the second-stage auth_token retrieval it looked like he was saving the token to a variable, when the variable only captured cURL's output, which is empty because the response goes to a file. All the magic happens in the cookiejar file.

I've also included a simple MSSQL schema for the table that a separate Task Scheduler entry saves this data to.

Feel free to ask any questions you might have.

I worked on doing it as a Windows batch file, but the native implementation of cURL doesn't behave quite the same.

I may scrap that and try it in PowerShell, instead - it has its own implementation of cURL.

The site has a 10K limit on posts, so this will be broken into four postings.

Looks like I lost all my indentation... sorry.

Found the code option!

PHP:

/******************************************************************************
I use a lightweight framework called CodeIgniter, from which I've
extracted this code.
CI uses an MVC approach, and functions in the model are in specific classes,
in this case aliased to 'site'.
Retain this or flatten everything into One Big File as you see fit.
You shouldn't have much trouble adapting this to other frameworks or for
use in a stand-alone script.
If you're using a framework already, you ought to be able to rewrite this
to conform to your own use, or in whatever scripting language is your cup
of tea.
Running PHP 8.2.28 with cURL extensions
My thanks to NotWise
2025-05-18
******************************************************************************/

/******************************************************************************
Basic configuration data
CodeIgniter drops config data into an array and reads them with the
$this->config->item('arrayIndex') method.
You can set discrete variables or use $config as a global array directly.
APPPATH and FCPATH are CodeIgniter constants; define your own outside CI.
******************************************************************************/
// 20250517 - after the Win 11 upgrade my machine stopped connecting to the NAS through a share
define( 'NASHOST', '192.168.1.183' ); // configurable host - mapped drive, host name, share name, or IP for remote storage of files
define( 'LIT', 'literotica.com' );
define( 'AUTHLIT', 'auth.literotica.com' );

$config['leUsername']     = 'YOUR-ACCOUNT-NAME-GOES-HERE';
$config['lePassword']     = 'YOUR-ACCOUNT-PASSWORD-GOES-HERE';
$config['cookiejarFile']  = APPPATH . 'LitDownloaderCookieJar.txt'; // cookie file used by cURL
$config['leLogin_url']    = 'https://' . AUTHLIT . '/login';
$config['leReferer']      = 'https://www.literotica.com';
$config['leTokenURL']     = 'https://' . AUTHLIT . '/check?timestamp=';
$config['leEndpoint']     = 'https://' . LIT . '/api/3/submissions/my/stories/published.csv';
$config['leCcredentials'] = base64_encode( "{$config['leUsername']}:{$config['lePassword']}" );

// 20250517 - after the Win 11 upgrade, not resolving the host name
$config['fileStatsDir']        = '//' . NASHOST . '/BULK/MyPublishedStories/';         // 20250517 the primary folder for the stats import
$config['fileStatsArchiveDir'] = '//' . NASHOST . '/BULK/MyPublishedStories/Archive/'; // 20250517 secondary 'off-line' folder
$config['statsFilePrefix']     = 'myPublishedStories'; // used for reads and writes; all files are saved with this prefix in the filename
$config['dbName']    = 'litStats';
$config['tableName'] = 'myStories';
$config['maxTries']  = 3;

// Backups - the same file gets dropped in each location
$config['outputPathsList'] = array(
    FCPATH . 'My Stories/', // 20250517 local repository storage of retrieved data, FCPATH defined as doc root
    // '//MYCLOUDEX2ULTRA/BULK/MyPublishedStories/', // 20250517 remote storage of retrieved data
    '//' . NASHOST . '/BULK/MyPublishedStories/', // 20250517 remote storage of retrieved data
);

/******************************************************************************
Primary retrieval
I tend to over-log things - all of the log_message() calls are for testing
and diagnostic use.
Remove as you wish.
This is a method of a CodeIgniter class; the model is aliased to 'site'.
******************************************************************************/
function litDownloader() {
    log_message( 'trace', __METHOD__ . ' ' . __LINE__ . "" );
    $maxTries        = $this->config->item( 'maxTries' );
    $outputPathsList = $this->config->item( 'outputPathsList' );

    // I had an issue where the calls failed for several attempts in a row.
    // My data has a small gap in the middle due to this.
    // I try to recover semi-gracefully and retry.
    $knownBadResponses = array(
        "<html><body><h1>504 Gateway Time-out</h1>
The server didn't respond in time.
</body></html>",
    ); // make allowances for multiple bad returns

    do { // do maxTries times or until $ok
        $ok = TRUE; // reset on every attempt, so a successful retry is accepted
        log_message( 'trace', __METHOD__ . ' ' . __LINE__ . " tries left [$maxTries]" );
        $this->site->doLogin();
        $this->site->getToken();
        $storyStatsCSV = $this->site->getStoryStats();
        log_message( 'trace', __METHOD__ . ' ' . __LINE__ . " storyStatsCSV\n$storyStatsCSV" );
        foreach( $knownBadResponses as $badResult ) {
            if ( trim( $storyStatsCSV ) == trim( $badResult ) ) {
                $ok = FALSE;
                // Keep a copy of the bad response for diagnosis
                $thisFileName = 'Bad_result_' . date( "Y-m-d H-i-s" ) . '.txt';
                foreach( $outputPathsList as $thisPath ) {
                    $thisOutputFile = $thisPath . $thisFileName;
                    log_message( 'trace', __METHOD__ . ' ' . __LINE__ . " thisOutputFile [$thisOutputFile]" );
                    file_put_contents( $thisOutputFile, $storyStatsCSV );
                }
                sleep( 60 ); // give the server a minute before retrying
            }
        }
    } while ( !$ok and ( --$maxTries > 0 ) );

    if ( !$ok ) {
        log_message( 'trace', __METHOD__ . ' ' . __LINE__ . " ***** ABEND ***** import - maxtries [$maxTries]" );
        return;
    }

    $thisFileName = 'myPublishedStories_' . date( "Y-m-d H-i-s" ) . '.csv';
    // Same filename in multiple save directories for redundancy
    foreach( $outputPathsList as $thisPath ) {
        $thisOutputFile = $thisPath . $thisFileName;
        log_message( 'trace', __METHOD__ . ' ' . __LINE__ . " thisOutputFile [$thisOutputFile]" );
        file_put_contents( $thisOutputFile, $storyStatsCSV );
    }
}

/******************************************************************************
This is what I use to import the retrieved CSVs into my database.
Modify as needed.
******************************************************************************/
function litImporter() {
    log_message( 'trace', __METHOD__ . ' ' . __LINE__ . "" );
    $includeArchive = FALSE;
    $windowDays = 7; // retrieve default rolling week
    $recordsCount = $this->site->dbRecordsCount();
    log_message( 'trace', __METHOD__ . ' ' . __LINE__ . " recordsCount [$recordsCount]" );
    $deltasUpdated = $this->site->dbDeltasUpdatedCount();
    log_message( 'trace', __METHOD__ . ' ' . __LINE__ . " deltasUpdated [$deltasUpdated]" );
    // $includeArchive = ( $recordsCount == $deltasUpdated );
    // $includeArchive = (int) ( $deltasUpdated == 0 );
    $includeArchive = (int) ( $recordsCount == 0 ); // empty table: include the archive folder too
    log_message( 'trace', __METHOD__ . ' ' . __LINE__ . " includeArchive [$includeArchive]" );
    $fileList = $this->site->getStatsFilesList( $includeArchive );
    // log_message( 'trace', __METHOD__ . ' ' . __LINE__ . print_r( $fileList, TRUE ) );
    $this->site->importStoryStats( $fileList, $recordsCount, $deltasUpdated );
    if ( $deltasUpdated == 0 ) { $windowDays = 25*365; } // retrieve everything
    $allImportedDataByStorySourceFile = $this->site->getDBData( $windowDays ); // do once
    // print_r( $allImportedDataByStorySourceFile );
    $this->site->processDeltas( $allImportedDataByStorySourceFile );
}
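The retry idea in litDownloader(), translated to shell as a sketch. One design change, which is a suggestion rather than the original behavior: instead of matching one known 504 page, it accepts a download only if the file starts with the CSV header "Name", the same check NotWise's bash script makes, so any unexpected error page triggers a retry. with_retries is a hypothetical helper that wraps whatever command does the actual download.

```shell
#!/bin/bash
# Sketch: run a download command until its output looks like the stats CSV.

# with_retries TRIES DELAY FILE CMD...
# Runs CMD up to TRIES times, sleeping DELAY seconds between failed attempts;
# succeeds as soon as CMD succeeds and FILE starts with "Name".
with_retries() {
    local tries=$1 delay=$2 file=$3
    shift 3
    while (( tries-- > 0 )); do
        if "$@" && [ "$(head -c 4 "$file" 2>/dev/null)" = "Name" ]; then
            return 0
        fi
        if (( tries > 0 )); then
            sleep "$delay"
        fi
    done
    return 1    # out of retries
}
```

For example, `with_retries 3 60 published_stories.csv ./fetch_stats.sh` would mirror the three tries and one-minute pause above, where fetch_stats.sh stands for your own download script.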
