ElectricBlue

Joined May 10, 2014 · Posts 18,130

I guess, the first identified dodgy collation of data in Australia. That's the problem with this version of AI. There is zero intelligence, zero understanding of what the words actually mean in a human sense. It's not AI at all, it's just a photocopier with a pair of scissors, cutting and collecting sentences together.

It's been out nearly 5 months, what took them so long?

/s

I completely agree, and AI is a controversial subject.

Take responsibility for your toy.

However, ChatGPT is basically, in essence, an interactive Google search engine - by which I mean, its sources are the internet, and by derivation, human behaviours.

I was simplifying. The key point, and relevance to this discussion, being its sources.

That's the misunderstanding in a nutshell here: ChatGPT is very much not a search engine. Its training data are capped at some point in 2021, and there's been no new input since. It doesn't search for anything other than a word that's statistically likely to be appended to the current text, with no deeper understanding of context.
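To make the "statistically likely next word" point concrete, here is a toy sketch in Python. It is not how ChatGPT is actually built (that is a large neural network, not a word-pair counter), and the names `train_bigrams` and `most_likely_next` and the tiny corpus are made up for illustration. It only shows the basic idea of picking the next word from frequencies in the training text, with no notion of whether the result is true.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def most_likely_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical training text: the "model" only knows what is written here.
corpus = "the mayor was cleared . the mayor was praised . the mayor was cleared ."
model = train_bigrams(corpus)

# Picks whichever word followed "was" most often in the corpus.
print(most_likely_next(model, "was"))
```

If the training text had mostly said something false after "was", the sketch would just as happily output that instead, which is the worry being raised in this thread.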

Under Bing Chat's FAQs:

Can I trust that the AI is telling me the truth?

- ChatGPT is not connected to the internet, and it can occasionally produce incorrect answers. It has limited knowledge of world and events after 2021 and may also occasionally produce harmful instructions or biased content.

We'd recommend checking whether responses from the model are accurate or not. If you find an answer is incorrect, please provide that feedback by using the "Thumbs Down" button.

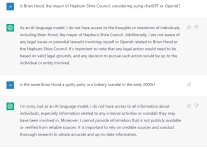

That's interesting, because it answers "yes" but the true answer is no, it's not being sued yet but there is a threat of a suit. So even this small sample size shows it's prone to error.

Prompt:

Is ChatGPT being sued in Australia?

Response:

Yes, an Australian mayor named Brian Hood has threatened to sue OpenAI if it does not correct ChatGPT’s false claims that he had served time in prison for bribery [1][2][3].

Learn more:

1. bing.com
2. moneycontrol.com
3. msn.com
4. msn.com
5. silicon.co.uk
6. silicon.co.uk

+2 more

No, but they will anyway.

1. Is it reasonable under all the circumstances for a person to rely upon ChatGPT as an information source, given what seems like a very high risk of error?

Doubtful. All they need to do is say 'We took some sources from the internet. So, like, it's about as accurate as the internet (not very).'

2. Does OpenAI have some minimal duty to keep it accurate?

Highly doubtful, especially as the data is historic.

3. If there is a duty, is it taking commercially reasonable steps to maintain its accuracy?

In which case it's not worth a pinch of shit, and its owners are morally bankrupt pretending it's got any worth at all, telling the public it's the next best thing in search engines. It's being spruiked as a foundation of truth, whereas in fact it's worthless. Clearly, you can't trust anything it regurgitates: what is true, what is not.

ChatGPT provides links to the sites it’s scraped. What did they say? What legal effect would it have if they told a different story? After all, it is a search engine that provides you with links, and those are links which it acknowledges it may summarise incorrectly.

'Yes, an Australian mayor named Brian Hood has threatened to sue -'

That's interesting, because it answers "yes" but the true answer is no, it's not being sued yet but there is a threat of a suit. So even this small sample size shows it's prone to error.

Ooh, new word.

spruiked

Worth big bucks too, apparently, the art of asking the "right" question. All seems a bit pointless, if you still don't know if the answer is correct or not.

There's a whole new subject of 'prompt engineering' developing that's devoted to crafting prompts which can't easily confuse chatbots. Their 'natural language' is not the same thing as ours.

It would depend on how someone phrases their question. If you ask the AI "Was that politician guilty of a crime?" and the AI replies "Yes" with no further context when the truth is that the politician was not, then the AI has defamed that politician. The AI is not another human with their opinions. It's a tool. And a broken tool needs to be replaced.

Another reason this probably wouldn't be an issue in the USA is section 230 of the Communications Decency Act, which provides that the provider of an interactive computer service shall not be treated as a publisher of information provided by a third party content provider. It's likely that OpenAI would fall within the scope of section 230 and be immune to liability.

I expect this is going to be the case across most of the English-speaking world, and probably beyond - it's why nobody's got away with successfully suing Facebook and the like for content. But that, of course, doesn't mean that if you or I get ChatGPT (or any of the other bots) to write us something, and we then publish it, we will also be exempt from defamation or libel actions. I expect that this is going to be a hard lesson for quite a number of people to learn.