VincentWriterman

Writer

- Joined

- Dec 21, 2022

- Posts

- 3

The auto-generated message is mind-blowingly frustrating.

The story I wrote is all my own work, save for some spell checking and grammar checking.

So I resubmitted it with a note saying it's all my own work, and that the use of Australian vernacular is reasonable proof that it's not generated by AI, because AI tools just don't speak like that.

Rejected again. The auto-generated response tells me to reword it, or to approach an editor. What use would that be when there's no way for me to know which parts of the text are tripping the AI sensor?

My other big problem with the auto-generated response is that there's nothing in it explaining what to do next. Like, do I contact someone and say I think there's been a mistake? If so, who? And why isn't that listed in any of the rejection emails?

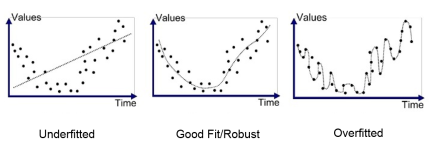

I know the surge of AI-generated content is a problem, but there's got to be a better way to deal with it than making authors feel like they're in an impossible battle against a faulty AI detection bot.
